To start, and to explain what led me to look into them: I wanted to create a starfield, but with some important characteristics. First, it had to be pseudorandom, i.e. appear random but in fact be deterministic: any given input, such as a random seed, would always produce exactly the same output. The upshot was that I could spot an area of interest, leave it to voyage vast distances, and upon return find the same area of interest right where and how I had left it, turning a pseudorandom smattering of features into what, in my experience as a player, was a massive persistent universe. Second, in order to have areas of interest at all, it had to have local structure, which a truly random distribution hardly has at all, let alone to a stimulating degree.

The result was that I had to do some research into the field of pseudorandom noise. This page proved useful by providing a number of reference images of the basic results of various noise generation methods, including the popular Perlin noise and its variations as well as Worley noise and strategies such as "texture bombing." I could easily compare these not only against the result I wanted but also against the results my code was producing, to see whether I was doing something wrong (or at least something different from what I thought I was doing). I ended up settling on this:

The page describes it as "Worley Voronoi f2-f1, Euclidean distance metric." Decoded into more digestible terms, it means the following:

- Worley's algorithm was used.

- The result resembles a Voronoi diagram.

- The terms used in the algorithm were "f1" and "f2," and the result value was based on the difference between f2 and f1 (more below).

- Distances used in the algorithm were computed as Euclidean distances, i.e. "real" geometric distances in a straight line as calculated using the Pythagorean Theorem.

Random "seed" points are arranged in space (the black dots). Each point has a colored cell in the diagram all to itself, which represents all positions in space that are closer to that seed point than to any other.

The important feature for my purposes was the edges of these cells: along each edge, points are equidistant from two seeds, and points near those edges are nearly the same distance from their two closest seeds. In other words, the difference between the distance to the closest seed point (which one could name "f1") and the distance to the second closest ("f2") is very small. Worley noise is the pattern generated by taking values such as this difference (or some other relationship between the distances to the nearest few seed points) and using them as result color values, probabilities, heights, etc. Worley noise is sometimes also called "Voronoi noise," "cell noise," or "cellular noise" (due to its production of cells that can resemble biological cells).
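As a rough illustration of the f2 - f1 idea, here is a minimal Python sketch. The function name `worley_f2_minus_f1` and the hand-picked seed points are my own assumptions for the example, not anything taken from the reference page, and it simply checks every seed rather than doing anything clever.

```python
import math

def worley_f2_minus_f1(point, seeds):
    """Return f2 - f1: the gap between the distances to the two nearest seeds."""
    # Euclidean (straight-line) distance from the point to every seed, smallest first.
    distances = sorted(math.dist(point, seed) for seed in seeds)
    f1, f2 = distances[0], distances[1]
    return f2 - f1

# Three hand-picked 2D seed points (hypothetical values, purely for illustration).
seeds = [(0.0, 0.0), (2.0, 0.0), (0.0, 5.0)]

on_edge = worley_f2_minus_f1((1.0, 0.0), seeds)  # halfway between the first two seeds
on_seed = worley_f2_minus_f1((0.0, 0.0), seeds)  # sitting exactly on a seed
print(on_edge, on_seed)  # 0.0 2.0
```

The value is zero on a cell edge and grows toward the middle of a cell, which is why mapping small values to bright colors draws the cell boundaries.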

The simplest form of Worley noise is of course Worley f1 - just color the result based on how far away the nearest point is. I started with this just to make sure I was on the right track, but it naturally produced suboptimal results - just a pattern of round voids that gradually transitioned into dense areas with distance - so I soon set about trying a variety of computations such as the f2 - f1 technique illustrated above. In the end I discovered that I in fact needed to use the four nearest points so as to create a threadlike structure with "clumps" near where multiple cell boundaries intersected.

How I created this structure specifically is somewhat of a brute-force method: the noise values represent the probability that a galaxy could exist at that point. Random positions are chosen in 3D space, the noise value is calculated for that location, and then a random value is generated and compared against the noise value - if the noise value is higher than the random value, a galaxy is placed at that location, and if not the algorithm tries again until it either finds a valid spot or gives up.
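The try-and-compare loop described above is a form of rejection sampling, and can be sketched in Python roughly as follows. Everything named here is an assumption for illustration: `noise_value` is a stand-in based on f2 - f1 rather than the four-nearest-point formula I actually used, and `FALLOFF`, `max_attempts`, and the default counts are made-up tuning values.

```python
import math
import random

FALLOFF = 4.0  # hypothetical tuning constant: higher means thinner filaments

def noise_value(point, seeds):
    """Stand-in noise in (0, 1]: 1.0 on a cell boundary, decaying as f2 - f1 grows."""
    distances = sorted(math.dist(point, seed) for seed in seeds)
    return math.exp(-FALLOFF * (distances[1] - distances[0]))

def place_galaxy(rng, seeds, size, max_attempts=50):
    """Try random positions until one passes the probability test, or give up."""
    for _ in range(max_attempts):
        candidate = (rng.uniform(0, size), rng.uniform(0, size), rng.uniform(0, size))
        # Keep the candidate only if the noise value beats a fresh random roll.
        if noise_value(candidate, seeds) > rng.random():
            return candidate
    return None  # no valid spot found within the attempt budget

def build_starfield(world_seed, seed_count=6, galaxy_count=200, size=10.0):
    """Generate seeds and galaxies from one seeded generator, so results repeat."""
    rng = random.Random(world_seed)
    seeds = [(rng.uniform(0, size), rng.uniform(0, size), rng.uniform(0, size))
             for _ in range(seed_count)]
    galaxies = [place_galaxy(rng, seeds, size) for _ in range(galaxy_count)]
    return [g for g in galaxies if g is not None]

galaxies = build_starfield(world_seed=7)
print(len(galaxies), "galaxies placed")
```

Because every random draw comes from a single generator seeded with `world_seed`, calling `build_starfield(7)` again reproduces the same galaxies, which is the determinism the whole project depends on.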

The cell boundaries are perfectly straight lines, so with an infinite number of infinitely small galaxies, an unnaturally precise geometrical structure would be visible, but with a more conservative density, the random variation in chosen positions, plus the ability of galaxies to occasionally pop up far from cell boundaries, lent enough variation that I considered the results good enough:

Now, one might note, particularly if armed with knowledge about programming and algorithm performance, that checking the distances to all of the seed points every time a new galaxy is generated, multiple times or otherwise, is (I think) an O(n²) operation: the total work is roughly the number of galaxies multiplied by the number of seed points, so if both grow together, the number of calculations grows with the square of the number of items involved ("n²"). This is generally regarded in the programming community as "very inefficient and you should be ashamed of yourself."

Naturally I did notice the high performance cost when there were a lot of seed points involved, and I saw a need to optimize. As it turned out, my later efforts to convert this starfield into a collection of cubic chunks proved very useful: each chunk could be made to contain only a few seed points and to concern itself only with seed points within itself or within the regions that would be occupied by its 26 neighbors. The operation retained its O(n²) performance, but dealing with only 27 × [a small number, say 3 or 4] = roughly 80 to 110 seed points meant that the performance cost was low, especially compared to the performance cost that might have been involved in implementing other sorts of optimizations.
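To make the chunk idea concrete, here is a hedged Python sketch of one way it could work. The names `chunk_seeds` and `nearby_seeds`, the constants `CHUNK_SIZE` and `SEEDS_PER_CHUNK`, and the trick of seeding each chunk's generator from a string of the world seed and chunk coordinates are all assumptions for the example rather than a record of my actual code.

```python
import itertools
import random

CHUNK_SIZE = 100.0   # hypothetical edge length of one cubic chunk
SEEDS_PER_CHUNK = 4  # "a small number, say 3 or 4"

def chunk_seeds(world_seed, chunk):
    """Deterministically generate the seed points that live inside one chunk."""
    cx, cy, cz = chunk
    # A string seed gives the same sequence on every run for the same chunk.
    rng = random.Random(f"{world_seed}:{cx}:{cy}:{cz}")
    return [((cx + rng.random()) * CHUNK_SIZE,
             (cy + rng.random()) * CHUNK_SIZE,
             (cz + rng.random()) * CHUNK_SIZE)
            for _ in range(SEEDS_PER_CHUNK)]

def nearby_seeds(world_seed, chunk):
    """Collect seeds from the chunk itself and its 26 neighbors (27 chunks total)."""
    cx, cy, cz = chunk
    seeds = []
    for dx, dy, dz in itertools.product((-1, 0, 1), repeat=3):
        seeds.extend(chunk_seeds(world_seed, (cx + dx, cy + dy, cz + dz)))
    return seeds

seeds = nearby_seeds(world_seed=1, chunk=(0, 0, 0))
print(len(seeds))  # 108, i.e. 27 chunks * 4 seeds each
```

Since a neighbor's seeds are regenerated from its coordinates rather than stored, two adjacent chunks always agree on the seed points they share, and the number of seeds any one galaxy has to check stays fixed no matter how large the universe gets.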

A drawback of this is that only so much local complexity can exist within any given chunk - 3 or 4 seed points means only 3 or 4 voids or superclusters can be present - but for my purposes this was more than sufficient, especially considering that, should I need more complexity, I can simply add more chunks, and should I need a huge number of chunks, many of them would be very far from the player, where only a few galaxies apiece would be sufficient. Programming is often an art of finding a local optimum of what works well enough for your needs without having to be too complicated to build, maintain, and troubleshoot.

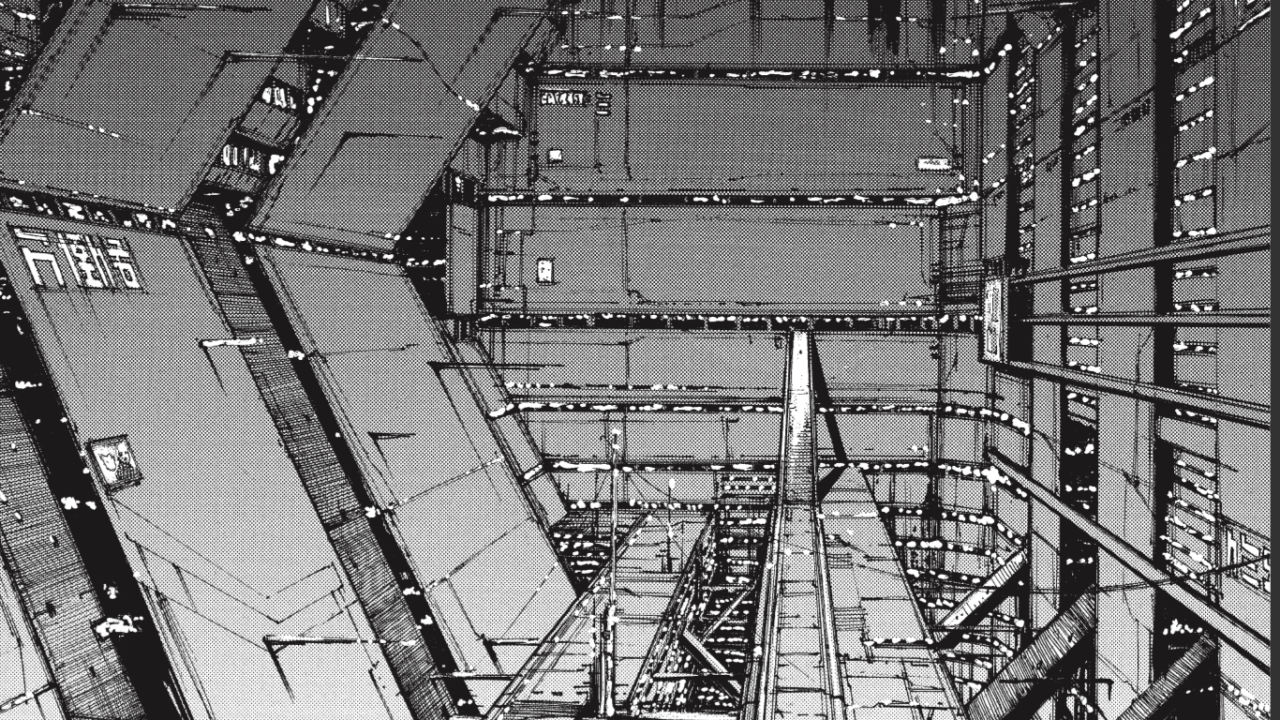

Of note is that Worley noise happens to be the same technique implemented in Space Engine, as illustrated above, although with a few differences: SpaceEngineer notes that he "distorted" the results using "FBM (fractional Brownian motion) noise" to raise the quality that little bit higher, and the octree-based structure of the game meant that optimization had to be done rather differently from the simple chunk-based strategy I used. Nevertheless I felt proud of independently discovering and exploiting the same computational principles and creating something that yielded similar results.