ShipBasher is still in "active" development, i.e. not abandonware at this time, but I've flitted temporarily to another project.

Have you ever been lost in a parking garage, perhaps late at night, searching for your car? Did you walk for what felt like hours, through seemingly endless levels and zones, all starting to blend together and look the same, and none giving any clear indication whether you were getting closer to your car or just more lost?

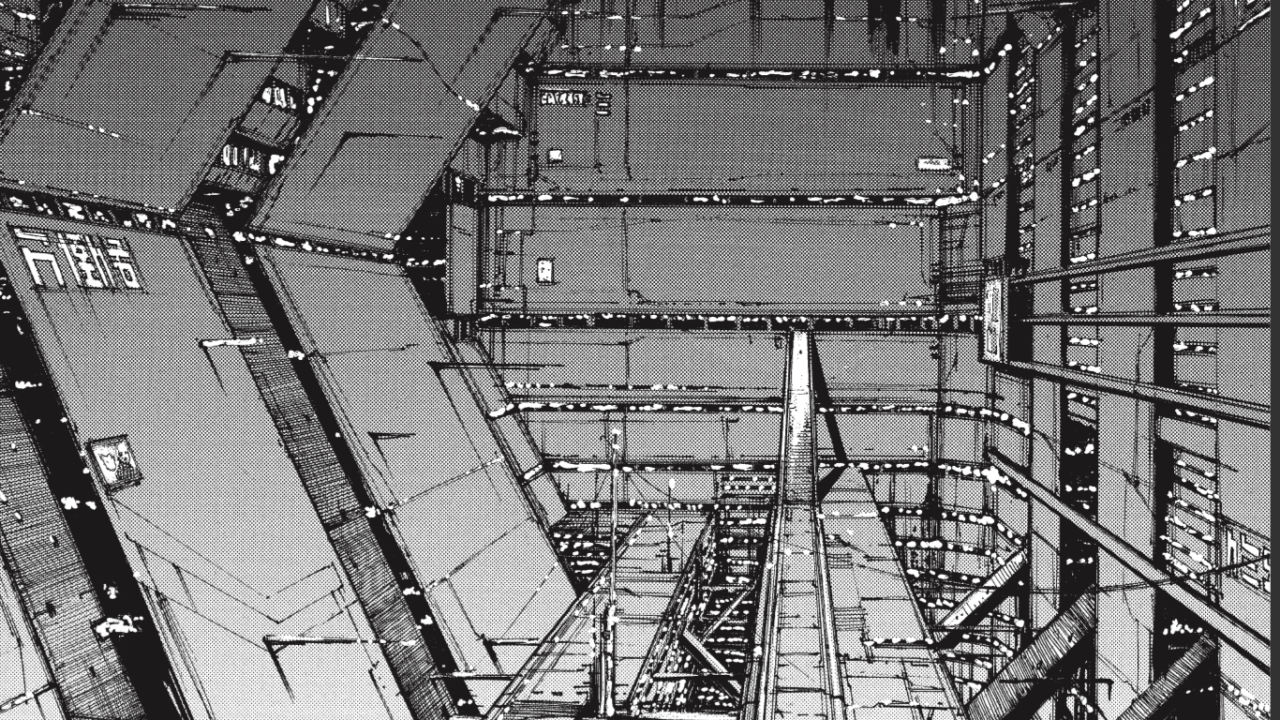

While playing with a test build of my Procedural Universe chunk system, some of my contacts and I noted that it evoked a massive parking garage. Before long an idea took shape to capitalize on this in an art game that would double as a more entertaining demo. For lack of a better candidate, I have given it the self-explanatory name "Find Your Car."

Originally I considered naming the game "The Interview" for reasons that become evident below, but unfortunately the name was taken by a game actually about an interview.

In "Find Your Car," the player is to spawn in a car (wow!) within an endless, procedurally generated parking garage. The advertised goal at this point is to find a nice parking space, anywhere the player likes. Once parked, the player leaves the car.

Upon leaving the car, the player is alerted that an important job interview is about to start and must hurry to it, racing the other candidates on the way. These take the form of simple NPCs (i.e. zombies) that chase the player and move toward the interview location, an unknown point whose distance from the player's parking space is determined by the game's difficulty setting (if a high difficulty is chosen, the interview is very far away).
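
As a rough illustration of how a difficulty setting could translate into an interview location, here is a minimal Python sketch. Everything in it is hypothetical: the difficulty names, the distances in `DIFFICULTY_DISTANCE_M`, and the function `pick_interview_location` are made up for the example and are not taken from the actual game.

```python
import math
import random

# Hypothetical tuning values: straight-line distance in metres per difficulty.
DIFFICULTY_DISTANCE_M = {"easy": 100.0, "normal": 400.0, "hard": 1500.0}


def pick_interview_location(parking_xy, difficulty, seed):
    """Return a point at the difficulty's distance from the parking space.

    The direction is chosen by a seeded random generator, so the same seed
    always produces the same location.
    """
    rng = random.Random(seed)
    distance = DIFFICULTY_DISTANCE_M[difficulty]
    angle = rng.uniform(0.0, 2.0 * math.pi)
    px, py = parking_xy
    return (px + distance * math.cos(angle), py + distance * math.sin(angle))
```

Note that this only controls the straight-line distance. In a garage full of walls and ramps, the actual walking distance can be much longer, or the point may not be reachable at all.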

Once the location is reached, lo and behold! The player gets the job immediately - but don't congratulate yourself just yet. In order to get home, you must find your car. This is when the "real" game starts: the player must now wander through the near-infinite parking garage, perhaps retracing steps, in search of the car. The player no longer has to worry about enemies, but will encounter a variety of distractions including the all-too-tropey "scenic vistas," various other cars, and Easter eggs such as perhaps exotic vehicles (how did a train get in here?), the remains of past wanderers who failed to find their cars, etc.

(We ride eternal on the highways of Valhalla! WITNESS ME)

So that's the design. So far a closed alpha build of the game exists, in which there is a fully functional procedural parking garage, basic (if unstable) controls for a car and player character, pop-up messages about goals, and a victory message indicating how long it took to find the player's car.

Still to do are adding "rival" NPCs, all the cool Easter eggs I mentioned above, a bunch of polish, and solving a few playability issues. Bad luck can lead to spawning trapped in a small area or being physically obstructed from reaching the interview location, and unpredictable generation conditions can lead to having to walk for several kilometers to reach the interview location even on the easiest of difficulties... or at the other extreme, finding it right smack next to where you parked:

In this image the interview location is indicated by the brown door in the background.
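
One possible way to catch these cases is a bounded breadth-first search outward from the parking space, keeping only the cells whose walking distance falls inside a band set by the difficulty. The sketch below is in Python and assumes the garage can be queried as a grid of walkable cells. The names `walking_distances`, `candidate_interview_cells`, and `is_walkable` are hypothetical, and this is not how the current build works.

```python
from collections import deque


def walking_distances(is_walkable, start, max_steps):
    """Breadth-first search over 4-connected cells, bounded by max_steps.

    is_walkable is a callable taking an (x, y) cell, so it can query a
    procedurally generated world on demand. Returns {cell: steps}.
    """
    dist = {start: 0}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        d = dist[(x, y)]
        if d == max_steps:
            continue  # do not expand past the search bound
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if nxt not in dist and is_walkable(nxt):
                dist[nxt] = d + 1
                queue.append(nxt)
    return dist


def candidate_interview_cells(is_walkable, parking_cell, min_steps, max_steps):
    """Reachable cells whose walking distance lies in [min_steps, max_steps]."""
    dist = walking_distances(is_walkable, parking_cell, max_steps)
    return sorted(c for c, d in dist.items() if min_steps <= d <= max_steps)
```

An empty result would mean the player is boxed in or nothing suitable is in range, so the game could pick a different spawn. Because the search stops at `max_steps`, it stays finite even in an endless garage, and `min_steps` keeps the door from appearing right next to the car.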

Did I mention needing a lot of polish? As it turns out, a humongous modular building comprises a lot of meshes and in turn a lot of polygons, which give my pitiable 6-year-old integrated graphics chip a hard time unless I make everything as simple as possible.

All the same, whilst playtesting this I was surprised to find how well it captured the mood of my past experiences wandering despondently through parking garages, so in a way the project is already a success.

Next time I hope to have an open alpha (or beta) build available and perhaps do a post-mortem on what I learned in the course of making this, how my chunk system matured during the process, what I might do going forward, etc.
