
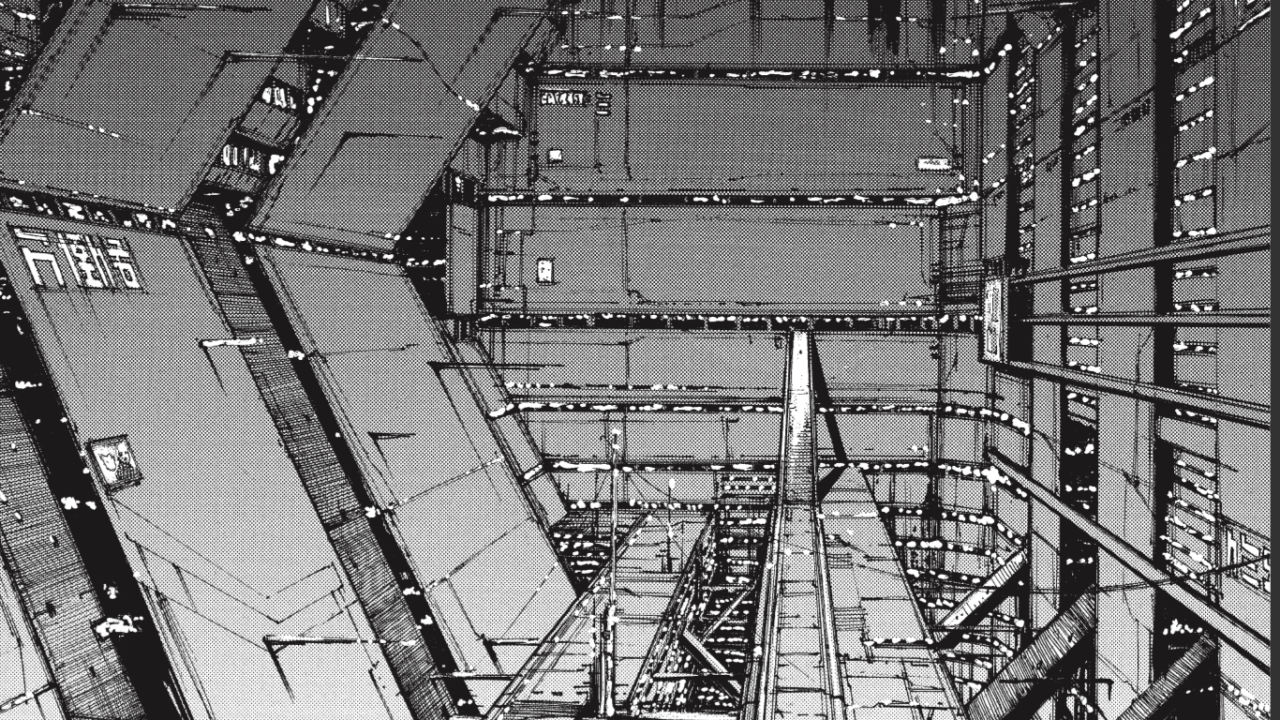

("BLAME!" by Tsutomu Nihei, chapter 7 page 28)

As of my last post, my bridge generator had stepped out of the 2D realm into the wider (or should I say thicker? deeper?) world of 3D and gained the ability to work at angles and generate staircases. This should enable it to successfully build bridges in a wider variety of conditions, but viewed from above and strictly examining its functionality on a horizontal plane (that is, for whatever definition of "horizontal" is relevant), its capabilities haven't really changed. I've concluded that they need to change, however - randomized end points turn out to rarely fall into configurations amenable to the existing bridge generation logic, as I shall explain by recounting my efforts to explore the full possibility space.

Many game developers (or at least those who make tutorials) advise starting new endeavors by proverbially stepping away from the computer and using low-tech tools such as paper models or pencil and paper (whatever involves paper I guess). I confess I don't do this as often as these tutorials seem to suggest I should, but along my journey with this procedural generation project I've done so a fair number of times.

As before, I simplified the problem into one of connecting two locations on a line. Every bridge with two end points will necessarily have some line that would connect its end points, so if I base my coordinate system around this (i.e. pretend it's just a horizontal line of fixed length), I can skip all the reasoning around whether one point is behind the other, which one is on the right or left, etc. as long as the final code I write retains this agnosticism (e.g. don't hard-code anything based on the global X axis). The two locations on this line (represented below by circles and spheres) each have a facing direction (represented below by small arrows or cones). Drawing and contemplating a few sketches, I was able to identify six "classes" of bridge that could exist - of which my current algorithm could handle only one!
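To make the "pretend it's just a horizontal line" idea concrete, here is a minimal top-down (2D) sketch in Python. The function name `to_line_frame` and the tuple-based vectors are assumptions made for illustration, not the generator's actual code. It re-expresses a facing direction relative to the connecting line, so nothing downstream needs to know where the global X axis is.

```python
import math

def to_line_frame(start, end, direction):
    """Express a 2D direction in a frame whose +x axis runs from start to end.

    Returns (along, across): `along` is the component parallel to the
    connecting line, `across` is the component toward its left-hand side.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("end points coincide; no connecting line exists")
    ux, uy = dx / length, dy / length  # unit vector along the connecting line
    # Project onto the line, then onto its left-hand normal (-uy, ux).
    along = direction[0] * ux + direction[1] * uy
    across = -direction[0] * uy + direction[1] * ux
    return along, across
```

With this, a bridge running "north" and a bridge running "east" look identical to the planning logic: only the `along` and `across` components of each facing direction matter.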


Since my original notes are very poorly suited to being read on a screen, I recreated the important parts digitally.

These classes are separated by whether the angles between the end point directions and the connecting line add up to more or less than 180 degrees, whether the end point directions are on the same side of the connecting line, and whether one or both end directions are facing away from the center point (i.e. have an angle from the connecting line of more than 90 degrees).
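As a rough sketch, those three properties could be computed like this in a top-down 2D view. The function name `describe_configuration` and the returned dictionary keys are hypothetical, and the sketch deliberately stops at the properties themselves rather than assigning class letters, since that mapping lives in the sketches.

```python
import math

def describe_configuration(start, start_dir, end, end_dir):
    """Compute the three properties the classes are separated by (2D, top-down)."""
    lx, ly = end[0] - start[0], end[1] - start[1]  # connecting line, start -> end

    def angle_to(dx, dy, tx, ty):
        # Unsigned angle in degrees between direction (dx, dy) and target (tx, ty).
        cross = dx * ty - dy * tx
        dot = dx * tx + dy * ty
        return math.degrees(abs(math.atan2(cross, dot)))

    def side(dx, dy):
        # Sign of the cross product: +1 left of the line, -1 right, 0 along it.
        cross = lx * dy - ly * dx
        return (cross > 0) - (cross < 0)

    a_start = angle_to(*start_dir, lx, ly)    # measured toward the far end
    a_end = angle_to(*end_dir, -lx, -ly)      # measured back toward the start
    return {
        "angle_sum": a_start + a_end,
        "same_side": side(*start_dir) == side(*end_dir),
        # How many end directions point more than 90 degrees off the line.
        "facing_away": sum(a > 90.0 for a in (a_start, a_end)),
    }
```

A direction lying exactly along the connecting line gets side 0 here; how such edge cases should be treated is left open.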

Only five classes are pictured because I examined the sixth (F) and determined that it should be impossible - it illustrated an "S" shaped bridge (explained in more detail below) connecting end points whose facing directions were on the same side of the connecting line (a situation that would inevitably put the bridge in class A or B and thereby not produce an S bridge).

My existing logic was able to handle class A easily enough; as the illustration shows, the end point directions and the connecting line together form a triangle, and the generator can proceed from there to find a good elbow position and connect everything.

Class B shouldn't be too complicated either - the generator simply needs to be adjusted to forgive angles in excess of 90 degrees when building its two elbow chains (the groups of curved segments at one end and at the elbow).

I'd like it if my generator could be made able to handle all of these classes, though. For the other three classes, the angles themselves should be nothing the algorithm can't handle, but a new ingredient was needed - the bridge had to be able to curve twice, first from one end to some midpoint on the connecting line and then from that midpoint to the opposite end, forming a vague "S" shape. So far I've encountered no compelling reason not to simply make this midpoint the halfway point between the end points (their arithmetic mean), so for now that's what I have done.

The generator now "plans" a bridge by examining the configuration of the end directions, identifying the class of bridge needed, and storing all of the important bridge data in a container class. This container class can itself contain two "sub-bridges" with their own sets of data; if an S bridge is needed, as in classes C, D, and E, the generator identifies the midpoint, computes suitable "average" directions leading out from it in opposite directions, and then plans out these two sub-bridges, each using the midpoint and one of the two end points. This container is then used in actual bridge generation, and if the sub-bridges are assigned, the task is split into two separate bridge generation tasks.
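A minimal sketch of what such a container might look like, in Python. All names here (`BridgePlan`, `plan_s_bridge`, `generation_tasks`) are made up for illustration, and the midpoint direction is passed in as a parameter because how the "average" direction is computed is not part of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float]

@dataclass
class BridgePlan:
    start: Vec
    start_dir: Vec
    end: Vec
    end_dir: Vec
    # Both set only for S bridges; each half is a complete plan in its own right.
    first_half: Optional["BridgePlan"] = None
    second_half: Optional["BridgePlan"] = None

def plan_s_bridge(start: Vec, start_dir: Vec, end: Vec, end_dir: Vec,
                  mid_dir: Vec) -> BridgePlan:
    """Split a bridge at the halfway point of the connecting line.

    `mid_dir` is the direction leaving the midpoint toward `end`; the
    direction leaving it toward `start` is simply its opposite.
    """
    mid = ((start[0] + end[0]) / 2, (start[1] + end[1]) / 2)
    toward_start = (-mid_dir[0], -mid_dir[1])
    return BridgePlan(
        start, start_dir, end, end_dir,
        first_half=BridgePlan(start, start_dir, mid, toward_start),
        second_half=BridgePlan(mid, mid_dir, end, end_dir),
    )

def generation_tasks(plan: BridgePlan) -> List[BridgePlan]:
    """Return the simple plans to build: the plan itself, or its two halves."""
    if plan.first_half is not None and plan.second_half is not None:
        return generation_tasks(plan.first_half) + generation_tasks(plan.second_half)
    return [plan]
```

Keeping the sub-bridges as ordinary plans means the generation step needs no special S-bridge code: it just builds whatever `generation_tasks` hands back.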

When working out how to calculate facing directions for the midpoint, I made sure that each facing direction was always on the same side of the connecting line as the facing direction of the end point toward which it pointed - thus, each of the two sub-bridges will have to fall into class A or B. While sketching out the problem, I determined that the properties of classes C, D, and E limit which classes the sub-bridges can fall into:

- Class C will have sub-bridges that always both fall into class A.

- Class D will have one sub-bridge in class A and the other sub-bridge in either class A or class B.

- Class E will always have one sub-bridge in class A and one in class B.
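The list above can be written down as a small lookup table, which makes it easy to ask which S-bridge classes a generator is guaranteed to handle given the simple classes it supports. This is only an illustrative sketch; `SUB_BRIDGE_CLASSES` and `always_buildable` are hypothetical names, and the order within each pair carries no meaning.

```python
# Possible (sub-bridge, sub-bridge) class pairs for each S-bridge class.
SUB_BRIDGE_CLASSES = {
    "C": [("A", "A")],
    "D": [("A", "A"), ("A", "B")],
    "E": [("A", "B")],
}

def always_buildable(bridge_class, supported):
    """True if every possible pair of sub-bridge classes lies within `supported`."""
    return all(
        cls in supported
        for pair in SUB_BRIDGE_CLASSES[bridge_class]
        for cls in pair
    )
```

For a generator that only supports class A, `always_buildable("C", {"A"})` holds while the same check for D and E does not.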

This means that for now, my existing code is sure to be able to handle generating sub-bridges for bridges in class C, and sure enough, once I had done a fair bit of debugging (added complexity tends to add exponentially more bugs), I was treated to the beautiful first sight of an S bridge forming on my screen:

As has surely been implied heavily enough in this post already, this new chunk of work I've given myself is still far from finished. I have yet to see if my logic can handle class B (and in the likely case it can't, upgrade it), investigate whether it can handle classes D and E, or plug it back into other systems such as the city generator I showed before to see if it still works or if new bugs have appeared - let alone begin tackling the task of (finally) wiring it up to the procedural chunk system. I look forward to detailing my (mis)adventures with those hurdles soon. But hey - at least now I can finally imitate that manga panel at the top of the page!